The Case for an Edge-Driven Future for Supercomputing

“Exascale only becomes valuable when it creates and uses data that interests us,” said Pete Beckman, co-director of the Northwestern Argonne Institute of Science and Engineering (NAISE), at the most recent HPC User Forum. Beckman, who leads edge computing work at Argonne National Laboratory, made one point above all: edge computing is a crucial part of delivering that value for exascale.

Beckman opened with a quote from computer architect Ken Batcher: “A supercomputer is a device for turning compute-bound problems into I/O-bound problems.” “In many ways, that is still true today,” Beckman said. “What we expect from supercomputers is that they are so blindingly fast that reading and writing input and output is really the bottleneck.”

“If we take that concept and invert it,” he added, “we arrive at the idea that edge computing is a device for turning an I/O-bound problem into a compute-bound problem.”

Beckman outlined what he sees as the new paradigm of high-performance computing: one defined by extreme data production from massive detectors and instruments such as the Large Hadron Collider and radio telescopes, which generate more data than could ever be moved efficiently to supercomputers. This paradigm, he said, has produced a class of research problems in which it is more effective to examine data at the edge and forward only the important or interesting data to supercomputers for heavy analysis.
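To make the filter-at-the-edge idea concrete, here is a minimal sketch in Python. It assumes a hypothetical `Reading` record and a pluggable `score` function standing in for whatever model runs on the edge device; readings scoring at or above a threshold are forwarded and the rest are dropped locally. None of these names come from Beckman's projects; they are illustrative only.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List, Tuple


@dataclass
class Reading:
    """One raw capture from an instrument (hypothetical structure)."""
    sensor_id: str
    payload: bytes


def edge_filter(readings: Iterable[Reading],
                score: Callable[[Reading], float],
                threshold: float) -> Iterator[Reading]:
    """Yield only the readings the local model scores at or above threshold."""
    for reading in readings:
        if score(reading) >= threshold:
            yield reading


def forward_interesting(readings: List[Reading],
                        score: Callable[[Reading], float],
                        threshold: float) -> Tuple[List[Reading], int, int]:
    """Filter at the edge; report the kept readings, bytes produced, bytes forwarded."""
    produced = sum(len(r.payload) for r in readings)
    kept = list(edge_filter(readings, score, threshold))
    forwarded = sum(len(r.payload) for r in kept)
    return kept, produced, forwarded


if __name__ == "__main__":
    # Toy data: the first byte of each payload stands in for a model's
    # "interest" signal; a real deployment would run inference here instead.
    frames = [Reading("cam0", bytes([v]) * 1000) for v in (10, 200, 30, 250)]
    kept, produced, forwarded = forward_interesting(
        frames, score=lambda r: r.payload[0] / 255, threshold=0.5)
    print(f"kept {len(kept)} of {len(frames)} frames; "
          f"forwarded {forwarded} of {produced} bytes")
```

With the toy data, two of the four 1,000-byte frames pass the threshold, so half the bytes are forwarded. The point of the design is that the expensive step (scoring) happens next to the instrument, and only the reduced stream crosses the network.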

Beckman explained that there are several reasons why edge processing may be preferable: more data than bandwidth, of course, but also the need for low latency and fast triggering, as in self-driving cars; privacy or security requirements that prevent the transfer of sensitive or personal data; the desire for additional resilience through distributed processing; or energy efficiency.

Beckman, for his part, is pushing into this new paradigm, which he says “was made possible largely by AI,” through Waggle, which began as a wireless sensor system designed to enable smarter urban and environmental research. With Waggle, Beckman said, the idea was to “understand the dynamics of the city” through pedestrian monitoring, air quality analysis and more. The first generation of sensors was installed throughout Chicago, generating data that was then shared with scientists.

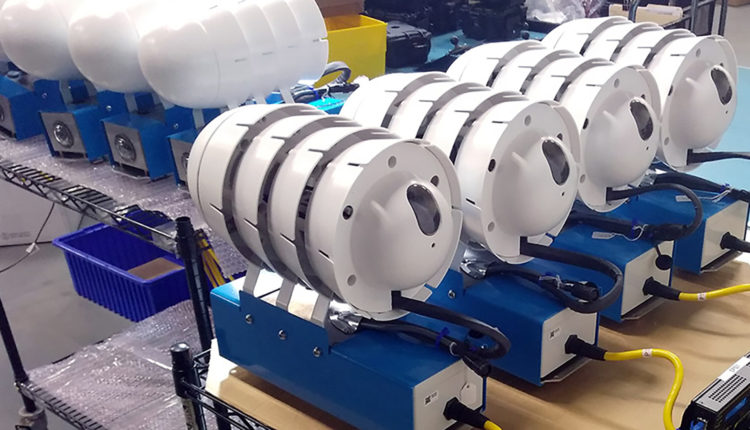

The latest version of the Waggle sensor, Beckman said, has just been developed and is far more powerful: an AI-enabled edge computing platform that processes its input on an Nvidia Xavier NX GPU. The platform is equipped with cameras pointed at the sky and the ground, atmospheric sensors, rain sensors and mounting points for additional sensors. Beckman added that Lawrence Berkeley National Laboratory is building its own Waggle configuration for one of its projects.

These Waggle sensors, Beckman explained, serve an even larger vision: the NSF-funded Sage project based at Northwestern University (also led by Beckman). Through Sage, he said, the goal was to “take these types of edge sensors and use them in networks across the United States to build what we call software-defined sensors”: flexible endpoints that can later be specialized for a specific purpose.
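One way to picture a "software-defined sensor" is a generic node whose purpose is fixed only when a task is deployed to it. The Python sketch below is a hypothetical illustration, not Sage's actual software: a `PLUGINS` registry maps task names to functions, and each task receives a list of detection labels that stands in for the output of an on-board model. The two example tasks echo uses mentioned in the article (pedestrian flow, wildfire smoke), but their names and logic are assumptions.

```python
from typing import Callable, Dict, List

Task = Callable[[List[str]], dict]

# Hypothetical registry: task name -> function turning local detections
# into a small result record.
PLUGINS: Dict[str, Task] = {}


def plugin(name: str) -> Callable[[Task], Task]:
    """Decorator that registers a function as a deployable edge task."""
    def register(fn: Task) -> Task:
        PLUGINS[name] = fn
        return fn
    return register


@plugin("pedestrian-flow")
def count_pedestrians(labels: List[str]) -> dict:
    return {"pedestrians": labels.count("person")}


@plugin("smoke-watch")
def flag_smoke(labels: List[str]) -> dict:
    return {"smoke": "smoke" in labels}


class SoftwareDefinedSensor:
    """Generic node whose purpose is set by the software deployed to it."""

    def __init__(self, node_id: str) -> None:
        self.node_id = node_id
        self.tasks: List[Task] = []

    def deploy(self, task_name: str) -> None:
        # Specialize the node after installation by loading a task by name.
        self.tasks.append(PLUGINS[task_name])

    def run(self, labels: List[str]) -> dict:
        # Run every deployed task on the same local input and merge results.
        result: dict = {"node": self.node_id}
        for task in self.tasks:
            result.update(task(labels))
        return result


if __name__ == "__main__":
    node = SoftwareDefinedSensor("n1")
    node.deploy("pedestrian-flow")
    print(node.run(["person", "car", "person"]))
    node.deploy("smoke-watch")  # same hardware, new purpose
    print(node.run(["smoke", "person"]))
```

The hardware never changes between the two calls; only the deployed task list does, which is the sense in which the sensor is defined by software.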

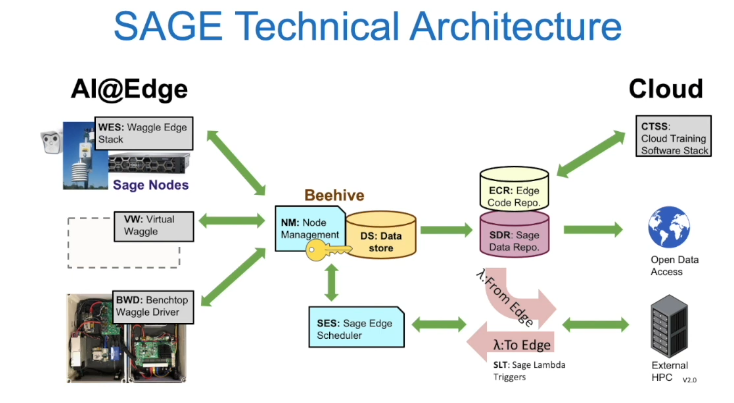

“Sage’s architecture … is pretty straightforward,” Beckman said. “We are processing data at the edge. … The data extracted by AI at the edge goes into the repository, and the repository can share this data with HPC applications, which can then process it.” Sage-enabled Waggle networks, Beckman said, are simple and secure, with no open ports. “You can’t connect to a Waggle node,” he said. “Nodes only phone home.”
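The data flow Beckman describes (AI at the edge, then a repository, then HPC applications) can be sketched in a few lines of Python. This is a hedged, in-process stand-in: the `Repository`, `EdgeNode`, `count_people` and `hpc_total` names are hypothetical, and a method call substitutes for whatever network protocol the real system uses. The one property it tries to mirror is the "phone home" design: an `EdgeNode` only pushes results outward and exposes no method for anything to reach into it.

```python
from typing import Callable, Dict, List, Optional


class Repository:
    """Hypothetical central store: edge nodes push results in, HPC jobs read them out."""

    def __init__(self) -> None:
        self._records: List[Dict] = []

    def ingest(self, record: Dict) -> None:
        self._records.append(record)

    def query(self, kind: str) -> List[Dict]:
        return [r for r in self._records if r["kind"] == kind]


class EdgeNode:
    """Outbound-only node: it pushes to the repository and offers no inbound API."""

    def __init__(self, node_id: str, repository: Repository,
                 infer: Callable[[List[str]], Optional[Dict]]) -> None:
        self.node_id = node_id
        self._repository = repository
        self._infer = infer

    def process(self, raw: List[str]) -> None:
        # AI at the edge: only the extracted result leaves the node;
        # the raw input is never sent anywhere.
        result = self._infer(raw)
        if result is not None:
            self._repository.ingest({"node": self.node_id, **result})


def count_people(frame: List[str]) -> Optional[Dict]:
    """Toy 'model': count person detections, report nothing if there are none."""
    n = frame.count("person")
    return {"kind": "pedestrian_count", "value": n} if n else None


def hpc_total(repository: Repository) -> int:
    """Downstream HPC stage: aggregate what the edge layer extracted."""
    return sum(r["value"] for r in repository.query("pedestrian_count"))


if __name__ == "__main__":
    repo = Repository()
    a = EdgeNode("a", repo, count_people)
    b = EdgeNode("b", repo, count_people)
    a.process(["person", "car"])
    b.process(["person", "person"])
    b.process(["car"])  # nothing of interest, so nothing is sent
    print(hpc_total(repo))
```

Here three frames are captured but only two small records reach the repository, and the HPC stage works from those records alone, never from the raw frames.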

Through Sage and Waggle, Beckman outlined a number of current and future uses. Huts equipped with Sage technology, he said, have already been installed alongside environmental monitoring equipment for the 81-site NEON project, which has been running since 2000. Various other partnerships, including one between Sage and ALERTWildfire, have used edge processing technology such as Waggle to improve low-latency wildfire detection and data reporting. Other projects range from tracking pedestrian flow to classifying snowflakes to measuring the effects of social-distancing and mask-wearing policies during the pandemic.

“Today, most HPC is focused on the input deck: you receive some data, then you compute and visualize,” Beckman said. “Clearly, the future of large HPC systems lies in processing data as it arrives, where the data will come in and be processed, and it’s the direct output from the edge that executes the first layer of that HPC code.”

“Every group we talk to about edge computing has a different idea. That’s what has been so much fun about the concept of a software-defined sensor,” he added. “The ability to run this software stack on edge devices and report results by doing AI at the edge is a very new area, and we are interested to see new uses and what problems you may have, and what ways there are to connect your supercomputer to the edge.”

Cover photo: Waggle nodes. Photo courtesy of Argonne National Laboratory.
