Rolling the dice on network slicing: Kubernetes sparks a rethink of 5G edge

Multi-access Edge Computing (MEC) was pitched like this: Virtualization can make a selected part of a very broad and distributed data center cloud look like the entirety of an enterprise data center network. Already, this seems like a trick that Amazon AWS, Microsoft Azure, Google Cloud, and the others pull off with ease.

“The key element in the MEC architecture is the MEC host,” stated a 2018 white paper published by the European standards group ETSI. The paper went on to define a MEC host as “a general-purpose edge computing facility that provides the computing, storage and other resources required by applications such as IoT data preprocessing, VR/AR, video streaming and distribution, V2X, etc.”

With a bit of marketing prestidigitation, the telecommunications industry could get into the cloud data center game without following Equinix, Digital Realty, and their ilk into the commercial real estate market. Telcos could use the real estate they already own or lease for their base transceiver stations (BTS), subdivide their data center installations among a plethora of smaller buildings (micro data centers, or µDC), and leverage the fiber optic network they’re already building for 5G Wireless backhaul to provide the virtual connections these facilities would need to appear contiguous to commercial customers. A highly diversified network of prefabricated tool sheds could appear no different to the customer than a hyperscale cloud facility.

Or, as is their wont, telcos may take MEC in an entirely different direction, both physically and virtually speaking. With the flip of a switch called local breakout (LBO) — a physical switch that they own — they could direct traffic into their own facilities, which are not “micro” by any means. Those facilities could then serve as gateways to familiar public cloud services, as has been the case for Verizon with AWS’ Wavelength service since late 2019, and AT&T with Google Cloud the following March.

“You have to be able to provide the same types of capabilities you would have in a traditional data center hosting environment,” explained Thierry R. Sender, Verizon’s director of edge computing strategy. “I wouldn’t necessarily say it’s a colo. . . but it’s a full-on data center environment.”

To become a phenomenon with anywhere near the scale of cloud computing, edge computing needs to be scalable. Whatever ends up orchestrating the system’s workloads needs to perceive the system as a whole as something more than the sum of its parts. That’s hard when your deployment facilities are small by design and separated by hundreds of miles of fiber optic cable, the vast majority of which links BTS facilities to one another rather than to customer premises.

It might make sense, perhaps, if dozens and potentially hundreds of smaller facilities throughout the world could be networked together. You could use every edge collectively like one big cloud, or selectively like a cafeteria, depending upon the requirements of each workload at the time. Or, alternatively, it might be more convenient for telcos in particular if all the edges were folded into one giant shape and co-located (to borrow a phrase) in one or two existing facilities.

Minions

Not everyone bought into the 5G MEC pitch. An edge-merging capability such as this would need to rely upon a degree of service-provider agnosticism that security-intensive telcos simply cannot permit.

Network slicing is the subdivision of physical infrastructure into virtual platforms, using a technique perfected by telecommunications companies called network functions virtualization (NFV). As originally conceived, NFV was a way for the functions that telcos made available to consumers through their own data networks to become portable and deployable on-demand at or near the customer’s point-of-contact. It was telcos’ first attempt at putting edge computing to work for them.

Throughout 2018 and 2019, AT&T executives and engineers declared that slicing their networks into company-owned and customer-leased segments (and reserving NFVs only for the former segment) could be both legally and technically impossible to achieve. They may have been right. But amid the least hopeful year in many folks’ memories, 2020 gave rise to what seemed to be a workable model for containerized network functions (CNF): a way to orchestrate a highly distributed, multi-tenant network, Kubernetes-style, whose area maps would look less like stripes than freckles. The open source community could once again hold the key.

Surprise: CNF isn’t a walk in the park either. As Kubernetes co-creator Craig McLuckie told me last July for Data Center Knowledge, CNF realized at telco scale would require an entirely different orchestration method than the one Kubernetes uses for the enterprise, essentially validating AT&T’s earlier objections.

Last November, one attempt to derive such a model emerged. Called Clovisor, and championed by a Google software engineer named Stephen Wong, it’s an effort to extend a reliable service mesh between telco networks in such a way that it includes the control plane of the virtual network. This is the part that’s separated from the data plane, and that determines how packets are forwarded, and to where.

Stephen Wong, Google software engineer.

“Going back to MEC, or any kind of edge data center or micro data center, you are trying to run single applications, whose components expand across both edge and cloud,” explained Wong, introducing his work during the most recent KubeCon virtual conference. As an example, he cited a machine learning system whose inference engines (the components that apply a trained model to incoming data) are distributed across a very broad area, but whose neural net is singular and running on a centralized cloud platform.

“It makes a lot of sense to run a single mesh across the cloud and edge sites, for this kind of application,” continued Wong. “Once you do that, you have a consistent network policy and telemetry model, across single applications.”

It’s something MEC’s designers hadn’t yet taken into account when they conceived it during the 4G LTE era: Distributed applications are bigger than networks. You could make a case for slicing telco clouds into strips and apportioning one strip per tenant, if all applications were singular virtual machines — gelatinous blobs traversing network pipes and emerging intact.

But what the consumers of a distributed cloud service presently want — and may actually be willing to pay a premium for — is a way to selectively geo-position the pieces of applications that actually need to be distributed — and not the entire package. This would require some type of framework capable of supporting the sum of all infrastructure that hosts any part of a distributed application.

Wong points to service mesh as an enterprise-class architecture that enables a mode of service discovery: a way for functions in a network to find one another, connect, and exchange data. Such an architecture makes perfect sense in a world where the enterprise is the entire network. But when the base of the network has to be striped, and then stripes have to be doled out to individual tenants, this particular architecture of service mesh may become not just improbable, but perhaps exactly as impossible as AT&T first suggested.
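To make “service discovery” concrete, here is a toy, in-memory registry in Python. It is a minimal sketch, not Istio’s or Kubernetes’ actual mechanism: the `ServiceRegistry` class, its methods, the site labels, and the addresses are all hypothetical. It shows the basic contract (services register where they run, clients look them up by name), plus a preference for endpoints at the caller’s own site, the kind of locality a single mesh spanning edge and cloud would need.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


class ServiceRegistry:
    """Toy service registry (hypothetical): maps service names to (site, address) endpoints."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def register(self, service: str, site: str, address: str) -> None:
        # A workload announces that an instance of `service` is reachable at `address`.
        self._endpoints[service].append((site, address))

    def deregister(self, service: str, address: str) -> None:
        # Drop a single instance, e.g. when its pod is terminated.
        self._endpoints[service] = [
            (site, addr) for site, addr in self._endpoints[service] if addr != address
        ]

    def lookup(self, service: str, prefer_site: Optional[str] = None) -> List[str]:
        """Return all addresses for `service`, with those at `prefer_site` listed first."""
        entries = self._endpoints.get(service, [])
        # sorted() is stable; False (same site) sorts before True (other sites).
        ordered = sorted(entries, key=lambda entry: entry[0] != prefer_site)
        return [addr for _, addr in ordered]


registry = ServiceRegistry()
registry.register("inference", "edge-store-17", "10.0.1.5:8080")
registry.register("inference", "central-cloud", "10.9.0.2:8080")
print(registry.lookup("inference", prefer_site="edge-store-17"))
```

In a real mesh the registry is itself distributed and kept current by the control plane; the difficulty the article describes is what happens when that shared map has to be carved up among tenants.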

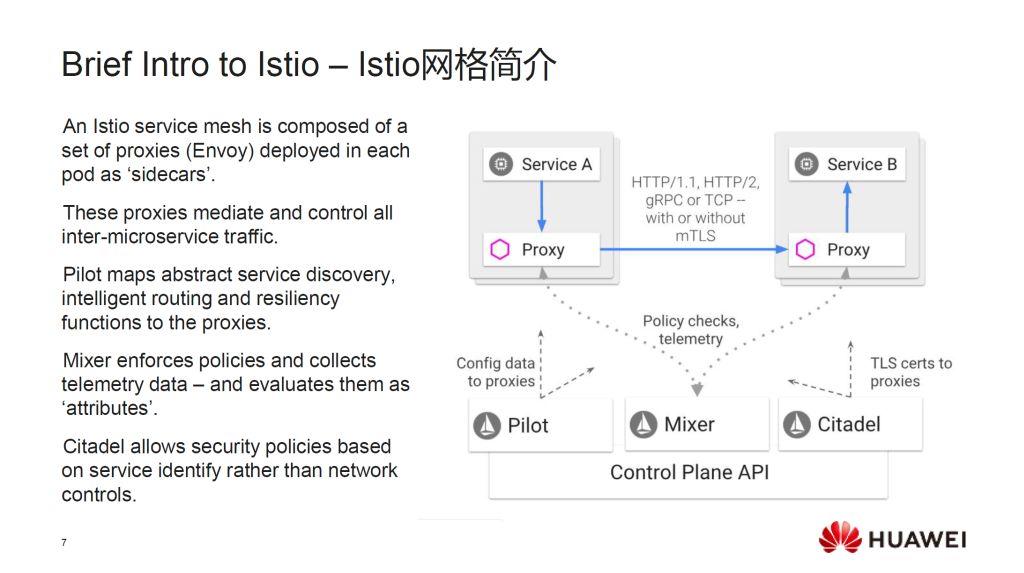

For a network control plane this complex, he explained, it would appear better to have a centralized policy arbiter, which would make it easier to scale. One effort that tried this approach in 2018 was championed by Huawei, as part of a concept it marketed at the time as the Service-Oriented Core (SOC). Under this concept, a system called Clover (from which Clovisor would later flow) would establish a service mesh-based framework that re-envisioned virtual network functions (VNF) not just as CNFs, but as fully distributed microservices.

Slide from a 2018 presentation by Futurewei Technologies, the US-based research arm of Huawei.

In a Kubernetes cluster, pods are the containment units for active functions. Clover gave each pod its own sidecar, which served as a proxy for communicating with the broader service mesh. Avoiding a reinvention of the proverbial wheel, Clover used the existing Istio service mesh, which uses Envoy sidecars. The telemetry and policy functions for each sidecar proxy would be funneled through an Istio component called Mixer. Deployed cleverly, Mixer could be reconfigured pretty much on demand, changing its stripes, if you will, to serve a variety of clients concurrently. Think of a traffic cop who could change uniforms whenever cars from different districts showed up at the intersection, and you get a bit of the idea.
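The sidecar arrangement Clover borrowed from Istio can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not Istio, Envoy, or Mixer code: `CentralPolicyServer` stands in for the centralized role Mixer played, `Sidecar` stands in for the per-pod proxy, and all names are hypothetical. What it illustrates is that every outbound request triggers one remote policy check.

```python
from typing import Iterable, Set, Tuple


class CentralPolicyServer:
    """Stand-in for a centralized policy arbiter in the role Mixer played (hypothetical API)."""

    def __init__(self, allowed: Iterable[Tuple[str, str]]) -> None:
        self.allowed: Set[Tuple[str, str]] = set(allowed)  # permitted (source, destination) pairs
        self.checks = 0  # remote checks answered; in a real deployment each is a network round trip

    def check(self, source: str, destination: str) -> bool:
        self.checks += 1
        return (source, destination) in self.allowed


class Sidecar:
    """Per-pod proxy: the application hands it traffic, and it consults the central server."""

    def __init__(self, pod: str, policy_server: CentralPolicyServer) -> None:
        self.pod = pod
        self.policy_server = policy_server

    def send(self, destination: str, payload: str) -> str:
        # Every request waits on the central arbiter before anything is forwarded.
        if not self.policy_server.check(self.pod, destination):
            raise PermissionError(f"{self.pod} -> {destination} denied by policy")
        return f"delivered {payload!r} to {destination}"


server = CentralPolicyServer({("camera", "inference")})
proxy = Sidecar("camera", server)
for frame in range(3):
    proxy.send("inference", f"frame-{frame}")
print(server.checks)
```

Three requests produce three central checks. If the arbiter sits in a distant cloud region, that round trip is added to every edge request.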

If you’re familiar with the kinds of bottlenecks that produce the weakest links in a network, you already see where this discussion is heading. “Just by hearing that,” said Wong, who served as Clover’s project team leader, “you would know that it’s actually pretty terrible to run that on the edge.”

The edge outside the cloud

When we discussed the topic of 5G MEC last September, we referred to the structure to which it gave rise as an “edge cloud.” As always, there are various permutations as to what that phrase means, depending upon who utters it. But the general idea is, an edge cloud would bring disparate hosts across multiple edges together collectively as a single, variable cluster — as fuzzy as the cloud.

It’s much easier to draw a fuzzy analogy than to orchestrate a fuzzy cluster.

“The edge — the way I would describe it is as a geo-caching architecture,” explained Vijoy Pandey, Cisco’s vice president of engineering, in a recent interview. “It’s a set of services, on top of which you can build applications. But what kinds of applications make sense there? The resources that are available to those services, differ widely from a cloud, to on-prem, to all kinds of edge locations all the way down to a camera or phone. So why would I place an application in place X versus place Y? That’s a decision somebody has to make. Why can’t I place everything in my data center, or in the public cloud, or in a branch location? There is a reason to pick-and-choose one of these things.”
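Pandey’s “place X versus place Y” question can be framed as a small constraint problem. The Python sketch below is one hypothetical way to frame it, not Cisco’s method: each application component declares a latency budget and a CPU need, each site advertises its round-trip time, spare capacity, and a relative price, and the component goes to the cheapest site that satisfies both constraints. The site names and every number are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Site:
    name: str
    rtt_ms: float        # assumed round-trip time from the data source to this site
    free_cpu: float      # assumed spare CPU cores
    cost_per_cpu: float  # assumed relative price per core


@dataclass(frozen=True)
class Component:
    name: str
    max_rtt_ms: float    # latency budget the component can tolerate
    cpu: float           # cores the component needs


def place(component: Component, sites: List[Site]) -> Optional[Site]:
    """Return the cheapest site meeting the component's latency and CPU needs, or None."""
    feasible = [
        s for s in sites
        if s.rtt_ms <= component.max_rtt_ms and s.free_cpu >= component.cpu
    ]
    if not feasible:
        return None
    return min(feasible, key=lambda s: s.cost_per_cpu * component.cpu)


# Illustrative sites only; the figures are not measurements.
SITES = [
    Site("in-store", rtt_ms=1, free_cpu=2, cost_per_cpu=5.0),
    Site("metro-mec", rtt_ms=8, free_cpu=64, cost_per_cpu=2.0),
    Site("public-cloud", rtt_ms=60, free_cpu=10_000, cost_per_cpu=1.0),
]

for comp in [
    Component("frame-capture", max_rtt_ms=2, cpu=1),
    Component("frame-analysis", max_rtt_ms=20, cpu=4),
    Component("model-training", max_rtt_ms=1_000, cpu=32),
]:
    chosen = place(comp, SITES)
    print(comp.name, "->", chosen.name if chosen else "no feasible site")
```

With these made-up figures, capture stays in the store, frame analysis lands in the metro MEC site, and training goes to the public cloud. Change the numbers and the answers change, which is why somebody has to make the decision.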

Just before the pandemic hit, Pandey explained to us that microservices (the small parts of distributed applications) are being spread ever thinner across networks. To the software developer as well as to the end user, the connections between them need to be invisible. The network or networks that bind them should be transparent.

Now Pandey takes this idea further, suggesting that edge architecture may be neither about the customer nor the service provider, but instead the application.

For example, he cited an unnamed customer of Cisco’s Meraki cameras that happens to operate thousands of coffee houses worldwide. This customer plans to use these cameras for remote inventory maintenance, for ensuring mask wearing during the pandemic, and for occasionally peeking in on how well the baristas are serving their customers. In theory, it would be a single application with perhaps the most distributed edge deployment of any retail operation on the globe.

“All of these things have a direct revenue value on their business,” the engineering VP continued. “Now, you cannot take this across hundreds of thousands of stores globally, and make it work in a public cloud model. It’s just not going to scale. By the time that insight comes back, the customer’s already gone.”
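A back-of-the-envelope calculation shows why scale breaks the public cloud model here. Only the store count, the 33,000 locations attributed to this coffee customer, comes from the article; cameras per store, stream bitrate, and the size and rate of analysis results are assumptions chosen for illustration. The Python sketch compares shipping raw video to a central cloud against analyzing frames on site and sending only results upstream.

```python
STORES = 33_000             # from the article
CAMERAS_PER_STORE = 4       # assumption
VIDEO_MBPS = 2.0            # assumed bitrate of one camera stream, megabits per second
RESULT_BYTES = 1_000        # assumed size of one analysis result (e.g. a small JSON event)
RESULTS_PER_SEC = 1.0       # assumed results emitted per camera per second

SECONDS_PER_DAY = 86_400


def petabytes_per_day(bits_per_second: float) -> float:
    """Convert a sustained bit rate into decimal petabytes per day."""
    return bits_per_second * SECONDS_PER_DAY / 8 / 1e15


cameras = STORES * CAMERAS_PER_STORE
raw_video_bps = cameras * VIDEO_MBPS * 1e6                  # all video goes to the cloud
results_bps = cameras * RESULT_BYTES * 8 * RESULTS_PER_SEC  # analysis stays at the edge

print(f"raw video:    {raw_video_bps / 1e9:,.0f} Gbit/s, {petabytes_per_day(raw_video_bps):.2f} PB/day")
print(f"results only: {results_bps / 1e9:,.2f} Gbit/s, {petabytes_per_day(results_bps):.4f} PB/day")
print(f"reduction:    {raw_video_bps / results_bps:,.0f}x")
```

Under these assumptions, raw video works out to 264 Gbit/s of sustained backhaul, roughly 2.85 PB per day, while shipping only results is 250 times smaller. Different assumptions shift the numbers but not the shape of the trade-off.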

While many in this business tout the need to keep things simpler for the software developer, a deployment model that separates the data-gathering function of a real-time video analysis application from its frame-by-frame analysis function would arguably be the simplest for a team of developers to devise. But from the standpoint of deployment and maintenance (the “-Ops” side of DevOps), all that back-and-forth would be difficult enough for a small retail chain, let alone a coffee colossus with 33,000 locations worldwide.

What would make this even harder would be the requirement to share the same network with the telecommunications provider that owns it.

For 5G MEC to work, customer applications would not only need to be concurrently orchestrated; they would also be pressed to share space and time with telcos’ own 5G components, such as the Radio Access Network (RAN) and the 5G core, along with all the other services incorporated into the 5G Wireless portfolio. As of now, the questions of which services get divided into slices, how that happens, and who’s responsible remain unresolved.

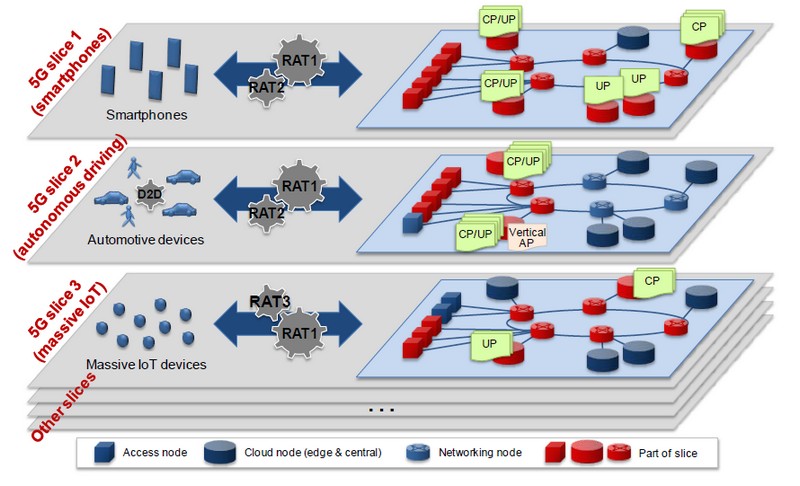

One scenario mobile operators envision for 5G network slicing. (Image: Next Generation Mobile Networks Alliance)

T-Mobile and others have suggested that network slices could divide classes of internal network functions, for instance compartmentalizing enhanced mobile broadband (eMBB), massive machine-type communications (mMTC), and ultra-reliable low-latency communications (URLLC) from one another. Others, such as the members of the Next Generation Mobile Networks Alliance (NGMN), suggest that slices could partition networks in such a way (as suggested by the NGMN diagram above) that different classes of user equipment, using their respective sets of radio access technologies (RAT), would perceive quite different infrastructure configurations, even though they’d be accessing resources from the same pools.
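In 3GPP’s 5G system architecture (TS 23.501), a slice is identified by an S-NSSAI: an 8-bit Slice/Service Type (SST) plus an optional 24-bit Slice Differentiator (SD). The standardized SST values 1, 2, and 3 correspond to eMBB, URLLC, and massive IoT, the same three classes T-Mobile’s suggestion would separate, while the SD leaves room for finer partitions within a class. The Python sketch below packs and describes these identifiers. The helper names and the SD value are hypothetical, and the byte layout is a simplification, not the exact NAS information-element encoding.

```python
from typing import Optional

# Subset of standardized Slice/Service Type (SST) values from 3GPP TS 23.501.
STANDARD_SST = {1: "eMBB", 2: "URLLC", 3: "MIoT"}


def make_snssai(sst: int, sd: Optional[int] = None) -> bytes:
    """Pack an S-NSSAI as SST (1 byte) optionally followed by SD (3 bytes). Simplified layout."""
    if not 0 <= sst <= 0xFF:
        raise ValueError("SST must fit in 8 bits")
    if sd is None:
        return bytes([sst])
    if not 0 <= sd <= 0xFFFFFF:
        raise ValueError("SD must fit in 24 bits")
    return bytes([sst]) + sd.to_bytes(3, "big")


def describe(snssai: bytes) -> str:
    """Human-readable form of a packed S-NSSAI."""
    sst = snssai[0]
    if sst in STANDARD_SST:
        label = STANDARD_SST[sst]
    elif sst >= 128:
        label = "operator-specific"
    else:
        label = "other standardized range"
    text = f"SST={sst} ({label})"
    if len(snssai) == 4:
        text += f", SD=0x{int.from_bytes(snssai[1:], 'big'):06X}"
    return text


# A URLLC slice set aside for one enterprise customer, told apart by a hypothetical SD value.
factory_slice = make_snssai(2, 0x0000A1)
print(describe(factory_slice))
```

Whether slices should split service classes, device classes, or individual customers is the open question; the identifier format itself accommodates any of those choices.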

Another suggestion being made by some of the industry’s main customers, at 5G industry conferences, is that telcos offer the premium option of slicing their network by individual customer. This would give customers willing to invest heavily in edge computing services more direct access to the fiber optic fabric that supports the infrastructure, potentially giving a telco willing to provide such a service a competitive advantage over a colocation provider, even one with facilities adjacent to a “carrier hotel.”

“I believe it will become the norm,” remarked Verizon’s Sender, “that we will have micro-edge implementations; private, on-site implementations; MEC in the public network; regional, local capabilities, all across the compute environment, all supporting one use case for our customer. These things don’t really compete; they’re complementary.”

Viewpoints are diametrically split on whether slicing could bring telco functions and customer functions together on the same cloud. Some have suggested such a convergence is vitally necessary for 5G to fulfill the value proposition embodied in China Mobile’s original proposal for Cloud Radio Access Network (C-RAN). Architects of the cloud platforms seeking to play a central role in telcos’ clouds, such as OpenStack and CORD, argue that this convergence is already happening, which was the whole point from the beginning.

AT&T has gone so far as to suggest the argument is moot and the discussion is actually closed: Both classes of functions have already been physically separated, not virtually sliced, in the 5G specifications, its engineers assert. AT&T launched its own 5G MEC initiative in January 2019, stating at the time, “The data that runs through AT&T MEC can be routed to their cloud or stay within an enterprise’s private environment to help increase security.”

But AT&T may yet wish it had not attempted to close the issue so soon. 5G’s allowance for smaller towers that cost less and cover more limited areas is prompting ordinary enterprises to seek their governments’ permission to become their own telecommunications providers, with their own towers and base stations serving their own facilities.

Divide and conquer

In March 2019, Germany’s Robert Bosch GmbH launched a partnership with Qualcomm, enabling the manufacturer to apply for and receive dedicated spectrum from German government authorities. Evidently frustrated by the pace of the network slicing argument, Bosch hard-wired its own 5G Wireless and 5G MEC services for its own factories. Automaker Volkswagen followed suit the next month, apparently for the same reasons. These may represent the most extreme edge computing use cases, said Pandey. But these companies didn’t take back control of their own communications systems from telcos just to hand it over to a different class of tech giant.

“They want all that decision-making to happen within that edge manufacturing location,” said Pandey. “It depends on what the edge vertical is, but all of these things have the same bottom line: There is a volume of data, there is a cost of doing business on a volume of data, and there is a cost of shuffling that data across [from edge to cloud and back].”

For years, the viability of network security policies depended upon hard compartmentalization. One network couldn’t be leveraged to break into another network, if there were no connections between them. Likewise, for applications that build their own virtual networks around themselves, one app can’t be leveraged to break into another app, if they don’t share the same namespace.

In 2015, VMware turned this entire idea on its head, with a concept called microsegmentation. Rather than dividing networks into large segments, the policies that determined what data gets routed where could be written in such a way as to only recognize restricted sets of addresses for each application, as though all the other addresses didn’t exist. Imagine a satellite map of a city where all the unimportant houses and buildings disappeared, redacted from history, but you don’t notice it because you ignore unimportant things by design. You can’t break into something you don’t believe in.

Since that time, this model has been expanded into newer, bolder security models such as the Software-Defined Perimeter (SDP). A network’s entire structure can be defined by policy alone: by a set of rules that, by restricting access only to what exists, implies that nothing else does.
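The common core of microsegmentation and SDP is a default-deny rule evaluated per application: anything not on an application’s allowlist is treated as if it did not exist. The Python sketch below models that idea with the standard `ipaddress` module. It is a conceptual illustration, not VMware NSX’s or any SDP product’s policy model, and the application names and address ranges are hypothetical.

```python
from ipaddress import ip_address, ip_network

# Hypothetical per-application allowlists. Anything not listed is invisible to the app.
POLICIES = {
    "pos-terminal": [ip_network("10.1.0.0/24")],
    "camera-analytics": [ip_network("10.2.0.0/24"), ip_network("10.9.0.10/32")],
}


def allowed(app: str, destination: str) -> bool:
    """Default deny: reachable only if the destination falls inside one of the app's networks."""
    dest = ip_address(destination)
    return any(dest in net for net in POLICIES.get(app, []))


print(allowed("camera-analytics", "10.9.0.10"))  # True: explicitly listed
print(allowed("camera-analytics", "10.1.0.7"))   # False: the POS subnet "doesn't exist" for this app
```

An application with no policy entry at all gets an empty list and can reach nothing, which is the "restricting access only to what exists" principle taken literally.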

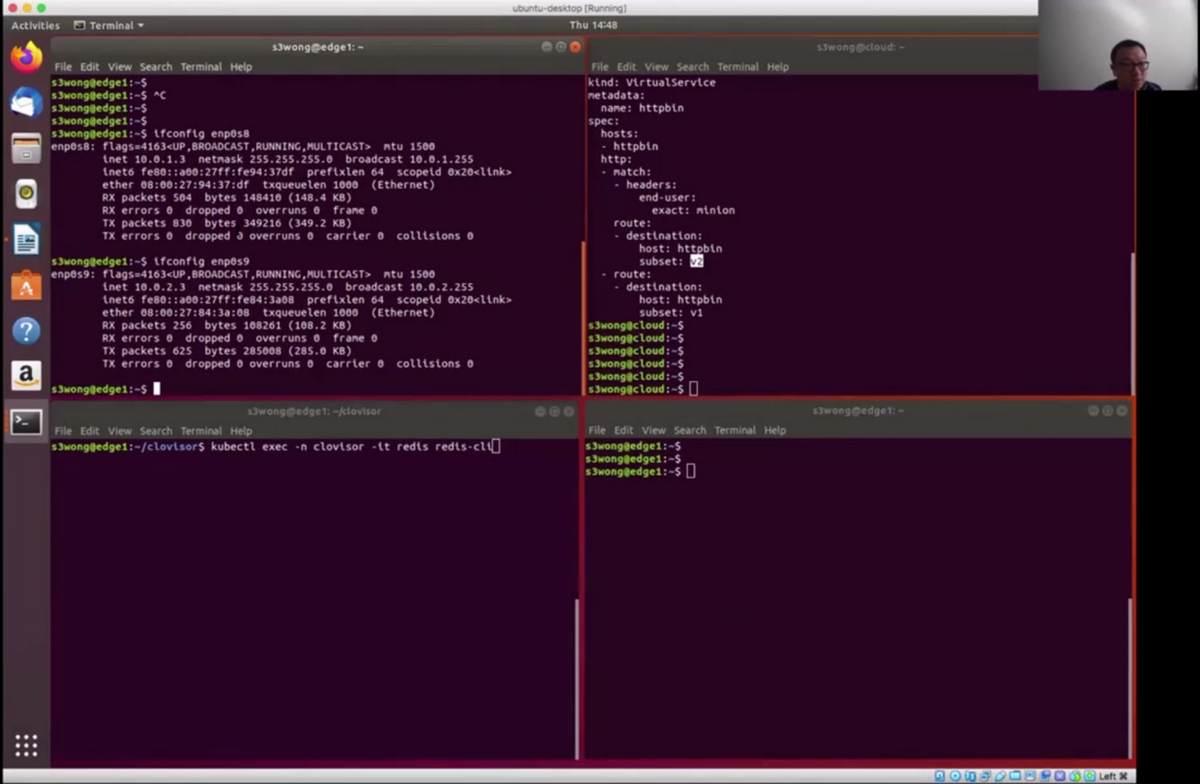

In this frame from his Clovisor demo, Stephen Wong shows how Clovisor running in the upper left node successfully injects network routes into three isolated nodes, starting with the upper right node.

This is essentially how Clovisor works: by “injecting” rules directly into each pod’s map of the network, using a policy mechanism rather than some administrator-only override.

For Huawei’s SOC vision to be viable, such a network map would need to be centralized within Istio’s Mixer component. Perhaps, as Cisco’s Pandey suggested, the central location for Mixer would be picked and chosen, but that choice would probably be made just once, and it would likely land on a public cloud platform.

And as Wong further explained, that would be a bad idea.

“If all your [policy] requests have to go to the cloud just to get Mixer to say yes,” remarked Wong, “that’s the kind of delay that’s completely unacceptable.”

It turned out this rule holds true across the board, for any application that makes use of a service mesh. By the end of last year, Mixer had been officially deprecated as a component of Istio. In its place is a mechanism that enables Clovisor to safely inject the ingress and egress points of the mesh directly into pods. This way, each pod manages its own version of the active policy, not unlike the way DNS servers maintain local maps for resolving domain names to IP addresses.
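The decentralized alternative can be sketched the same way. In this hypothetical Python model (not Clovisor’s or Istio’s actual code), a controller pushes versioned policy snapshots to every pod ahead of time, and each pod answers its own policy checks from its local copy, so no request waits on a round trip to a central arbiter. Version numbers let a pod ignore a stale or out-of-order update.

```python
from typing import Dict, FrozenSet, Iterable, Tuple

Pair = Tuple[str, str]  # (source, destination)


class PodPolicy:
    """Local copy of the mesh policy held by one pod (hypothetical structure)."""

    def __init__(self) -> None:
        self.version = 0
        self.allowed: FrozenSet[Pair] = frozenset()

    def apply(self, version: int, allowed: Iterable[Pair]) -> None:
        # Ignore stale pushes so an out-of-order update cannot roll the policy back.
        if version > self.version:
            self.version = version
            self.allowed = frozenset(allowed)

    def check(self, source: str, destination: str) -> bool:
        # Answered locally: no network round trip on the request path.
        return (source, destination) in self.allowed


class Controller:
    """Distributes each new policy version to every registered pod, off the request path."""

    def __init__(self) -> None:
        self.version = 0
        self.current: FrozenSet[Pair] = frozenset()
        self.pods: Dict[str, PodPolicy] = {}

    def register(self, pod: str) -> PodPolicy:
        policy = PodPolicy()
        policy.apply(self.version, self.current)  # no-op until something is published
        self.pods[pod] = policy
        return policy

    def publish(self, allowed: Iterable[Pair]) -> None:
        self.version += 1
        self.current = frozenset(allowed)
        for policy in self.pods.values():
            policy.apply(self.version, self.current)


controller = Controller()
edge = controller.register("edge-pod")
controller.publish({("camera", "inference")})
late = controller.register("late-pod")  # joins after publication and receives the current policy
print(edge.check("camera", "inference"), late.check("camera", "inference"))
```

The cost moves from every request to every policy change: the controller still has to reach each pod, but only when the rules change, not each time a packet needs a yes or no.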

By decentralizing the control plane mechanisms, Clovisor could conceivably give Bosch, Volkswagen, and enterprises of their scale and caliber, exactly the tools they need to manage their communications systems and edge computing platforms, on their own terms. And that’s a problem, at least for the original, would-be vendors of 5G MEC services — the communications service providers that bought into China Mobile’s all-out cloud migration plan in the beginning.

The solution to the network striping issue — if, indeed, that’s what this is — may borrow a page from microsegmentation. Instead of a hard subdivision made physically real by a toggled circuit breaker, Clovisor or something like it could create edge networks out of bits and pieces of pods, segmented and isolated on an as-needed basis.

Yet here is how the outcome of this solution changes everything: MEC started out as a way for telcos to get into the cloud services business, opening up new revenue channels from commercial customers. Unable to break into a market dominated by AWS, Azure, Google Cloud, and to a far lesser extent, “Other,” telcos settled for partnering with existing cloud service providers, offering customers commercial data services as part of their 5G contracts. But that business model is only viable if cloud functionality is the service being consumed by communications, and not vice versa.

If 5G MEC ends up looking like a culmination of Clovisor, edge deployments could instead end up as premium options for existing commercial cloud service contracts. In other words, it wouldn’t be AT&T, Verizon, or T-Something that sends the bill to its phone-using customers, but instead AWS, Azure, and GCP inserting line-items into their SLAs. Economically speaking, telcos would find themselves tossed into the back seat. Sure, they’d have new revenue sources, but it wouldn’t be the same as drumming up excitement and enthusiasm for something else with the “5G” moniker. And perhaps the one thing that ends up going right for 5G these past several years, would see someone else soaking up all the credit.

[Portions of this article are based on material that appeared in a previous edition of a ZDNet Executive Guide to 5G Wireless, which has since been revised.]
