NVIDIA: Data centers are getting smarter, but also less complex, thanks to intelligent networking hardware

Kevin Deierling, SVP of marketing for the networking business at NVIDIA, talks with host Bill Detwiler about how the data center is evolving and some common misconceptions about the modern data center on this episode of Dynamic Developer.

As the modern data center gets more intelligent, you might think it would also get more complex, but that’s not the case. In this episode of Dynamic Developer, I talk with Kevin Deierling, senior vice president of marketing for NVIDIA Networking, about the evolution of the data center and some common misconceptions about modern data centers. The following is a transcript of the interview, edited for readability.

NVIDIA is getting into the networking business

Bill Detwiler: Most people don’t necessarily think of NVIDIA as a networking company. Everyone knows about the graphics cards and the GPUs, and even about them being more in the data center and in high-performance computing and supercomputing, because we’re using GPUs to do things like that, but as a networking company, not so much. Give me the rundown about how NVIDIA is getting into the networking business and their thoughts on networking going forward.

Kevin Deierling, Senior Vice President of Marketing, NVIDIA Networking

Kevin Deierling: I think NVIDIA acquired Mellanox Technologies earlier this year–we closed that in April [2020]. That’s where I came from. Mellanox is really the leader in high-performance networking both with our InfiniBand technologies, which is HPC and artificial intelligence (AI) computing, but also with Ethernet. If you look at the highest performance Ethernet, at the 25Gb, at the 100Gb and even now 200Gb and 400Gb, Mellanox was the leader in network adapters at those speeds. Now, NVIDIA has really incorporated all of that and it’s part of their larger vision of what the data center is. We even have Ethernet switches that form the fabric of that data center and it really has to do with the changes that are happening in the data center.

Common misconceptions about data center networking

Bill Detwiler: It’s interesting. We’ve come such a long way from the old 10/100 cards and 10/100 switches that I remember installing, or working on Token Ring, so I’ll just date myself there. But I think that’s a great point you make towards the end, and I’d love to hear more about it: networking, in general, really has changed over the last several decades, and especially in the last few years. What are some of the misconceptions that people have about networking, either in the data center or just outside the data center, that they need to change their thinking around?

Kevin Deierling: I think one of the misconceptions people have is that as the network gets more intelligent, it becomes more complex and, in reality, it’s actually the reverse. One of the things that we’re doing with our DPU is we’re adding more and more intelligence into the network adapters, so we have a processor in there, and we have a whole bunch of really powerful programmable acceleration engines that allow us to do software-defined networking, software-defined security, software-defined storage and management. And really, by implementing all of that in the DPU, we actually make the job of the network administrator much simpler.

Before, there were all kinds of things that needed to be done in the network fabric and on the switches. Now, we’re shifting that onto the DPU, and there are two giant benefits of moving that intelligence into the DPU. First of all, it makes the fabric, the switches, much simpler to operate, deploy and manage, because they’re just really fast and efficient pipes that move data; you don’t have to have all of the complex network configuration. The second thing is it becomes intrinsically scalable, because if you’re putting the intelligence into every node, if your computer is now the data center, if that’s the new unit of computing, then as you add more compute servers you’re adding more capabilities in the network. It naturally scales in a very efficient way. I think the combination of having more intelligence in the DPU as the network connectivity in the server actually makes everything better; it scales better and it makes it simpler to manage.
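The scaling argument can be made concrete with a little arithmetic. The sketch below is only an illustration under assumed numbers: the throughput figures and function names are hypothetical and are not NVIDIA specifications. It compares one fixed-capacity central appliance, whose per-server share shrinks as servers are added, with per-server processing, whose aggregate capacity grows with the server count.

```python
# Illustrative model only: every throughput figure here is an assumption,
# not a published specification for any product.

def centralized_share_gbps(appliance_gbps: float, servers: int) -> float:
    """Per-server share when one fixed-capacity appliance serves every server."""
    return appliance_gbps / servers


def distributed_total_gbps(per_node_gbps: float, servers: int) -> float:
    """Aggregate capacity when each server brings its own adapter-level processing."""
    return per_node_gbps * servers


if __name__ == "__main__":
    APPLIANCE_GBPS = 400.0  # hypothetical central firewall or load balancer
    PER_NODE_GBPS = 100.0   # hypothetical per-server offload capacity
    for servers in (4, 40, 400):
        share = centralized_share_gbps(APPLIANCE_GBPS, servers)
        total = distributed_total_gbps(PER_NODE_GBPS, servers)
        print(f"{servers:>3} servers: central share {share:.1f} Gb/s each, "
              f"distributed total {total:.0f} Gb/s")
```

The model ignores fabric oversubscription and traffic patterns; it only shows why capacity that ships with every node grows in step with the cluster.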

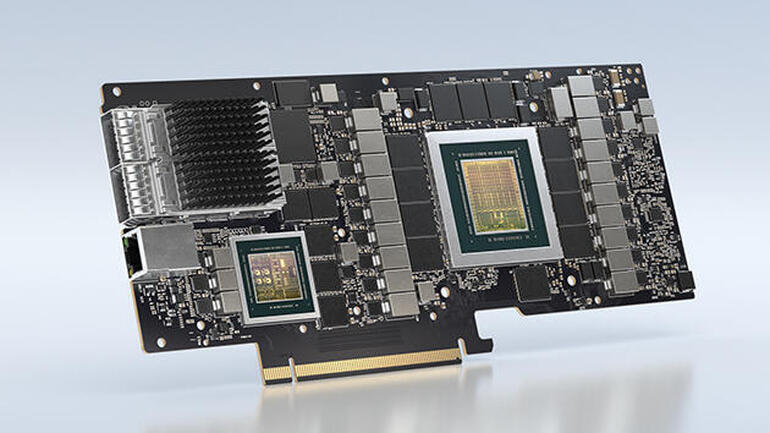

NVIDIA BlueField-2X AI-Powered DPU

Image: NVIDIA

Bill Detwiler: That’s a really interesting way to describe it, because as you were painting this picture of the data center, it reminded me of the way we used to think about CPUs, where you had a single core. It was solving the problem, but you were still using a single thing; that was just the entity, the object, you were using to solve the problem. Then we expanded within the PC to using a GPU or using other buses. The way you’re talking about networking is the way we used to talk about the internal mechanisms of a computer, how the data moved between the different units. Now, it’s just taking that picture, that concept, and moving it to a larger physical manifestation, which is the data center, where you have multiple systems, multiple processors and multiple machines tying into storage, and those connections, those buses, those interfaces that were on a single circuit board now have to be wires and fiber cables and fill up entire rooms or even larger geographic structures. Does that sound like maybe a pseudo-accurate way to describe it?

Kevin Deierling: I think it’s a beautiful way to describe it. It really is a continuum that we’ve been experiencing, going from a single-core CPU to multi-core CPUs, and now GPUs with really thousands of compute elements, and now we’re expanding to the entire data center. Instead of putting that on a backplane on a PC board with buses doing that, we actually need to build cables and Ethernet switches and InfiniBand switches, and intelligent adapters, NICs and SmartNICs with the DPU, and build all of that together to really form that backplane, if you will, of the entire data center.

DPUs are improving the data center

Bill Detwiler: I take it that a lot of new technology over the last several years has had to be developed to accommodate that. Just think about networking speeds. That type of interconnectedness wasn’t possible if you were still running old 10Mb connections, or even 100Mb connections; you need the gigabit-plus, like you said, even the 250, the 150Gb connections, to be able to move the data that quickly. You mentioned a new technology that I know Mellanox and NVIDIA are now working on, which are these DPUs. Tell me about them and tell me what they allow you to do in the data center that we couldn’t do before.

Kevin Deierling: Together with the CPU and the GPU, the data processing unit, or DPU, really forms the new trinity of this data center as a computer. The CPU is really good at running applications. With single-threaded applications, it offers good performance, but there are a lot of things that it can’t do well. Whether it’s graphics processing or, more importantly now in the data center, AI and machine learning, you need that massive parallelism that the GPU has. It’s similar with the DPU: there are functions that the CPU just doesn’t perform well, that will actually bring the most powerful CPU in a server to its knees. If you’re doing very packet-intensive processes, whether that’s streaming video, doing encryption or compression, or firewalls and load balancers, and as we move to this entirely software-defined data center, with software-defined networking, software-defined storage and software-defined security, we think that the DPU can perform all of that.

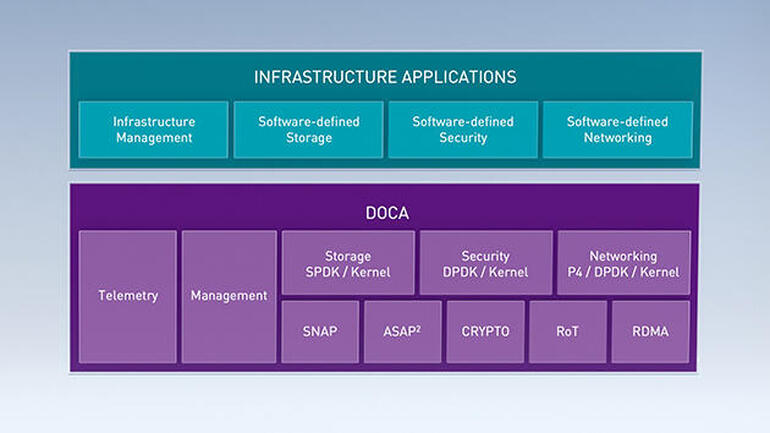

NVIDIA DOCA SDK – Data Center Infrastructure on a Chip Architecture

Image: NVIDIA

We offload, accelerate and isolate all of those software-defined functions onto the DPU. It’s a really powerful addition to this data center as a computer, and it adds a whole bunch of intelligence. What it does is free the CPU and the GPU up to run applications. It actually pays for itself; it’s an extremely efficient way to take advantage of the resources that you have in the data center and get the most out of them. It really returns all of the application processing back to the CPU, because the DPU is handling all of the low-level packet processing, compression and timing-critical functions that we need to do. So it’s a really powerful combination, this trinity of the CPU, the GPU and the DPU.

Every business is going to become artificially intelligent

Bill Detwiler: I think about the modern data center and how it used to be in the old days. Twenty years ago, every company had a data center: they had a server room somewhere, they had a NOC, and they had in-house staff that ran it. But we’ve slowly been transitioning to a hybrid model where you have a smaller-scale version of that and some of the infrastructure is in the cloud, some of the compute, some of the storage is in the cloud. So I would love to hear your take on where the market for this type of technology is.

Is it just the large data center operators, the cloud operators or the local data center operators? Or do you see it also having a place, obviously in large organizations and research organizations that use supercomputers and high-performance computing, but also, as we still have this hybrid model, in an on-prem data center of maybe the enterprise, someone you would not traditionally think of as using supercomputers? Who do you see using this type of technology right now, and maybe in the future?

Kevin Deierling: We think every business is going to become AI. Artificial intelligence is really transforming all businesses, and for a variety of reasons there are still data centers. Whether that’s for data gravity reasons, or latency reasons, or legal reasons in terms of maintaining your confidentiality and keeping data on-premises, or even for cost reasons, the data center itself is not going away; the enterprise data center is not going away. On top of that, we’re seeing an explosion in edge applications with 5G’s new capabilities, IoT devices, et cetera, and all of those will be enabled by AI. Whether it’s healthcare or robotics or automotive or natural language processing, or managing a recommendation engine in a retail environment, all of those businesses will benefit and become AI, because it’s just an imperative to succeed today to have a digital marketing place that leverages AI.

For all of those reasons, it will definitely still be there. On top of that, it needs to co-exist with the business that you can put into the Cloud. So you’re not going to bring everything on-premises. You’re not going to leave everything on-premises. Some things are going to be in the cloud and some are going to be on-premises and so you need to be able to deal with that hybrid model in a very effective way–that’s something that we’re really good at.

COVID is changing how networks are used and how we communicate

Bill Detwiler: With the move to remote work and people working at home... We’re not going back to the days where you needed a server in your basement. We’re not going back to the days, necessarily, when you had to have $20,000 worth of computing equipment in your house. But we do see a need, and I think it was Dell I was talking to, there is some thinking that you’re going to have these localized nodes that are going to do some of that heavy lifting on the edge, because it can’t all be moved back to the data center and people’s connections are just not as good. Right?

Kevin Deierling: I think the whole working-from-home phenomenon in this COVID era is really changing the way we need to use our networks and really communicate with each other today. Video conferencing was first demonstrated back in 1964, by AT&T at the World’s Fair. I was involved with this in the 1990s, and we used to talk about it as the technology of the future. We said it was always going to remain the technology of the future. It seemed like nobody was ever going to adopt it and use it. Here we are today, where people are talking to grandparents and children, and everybody in business is being driven onto all sorts of online platforms.

It’s fundamentally accelerated the adoption of these new types of working-from-home platforms, whether that is video conferencing or even data streaming and video streaming. The way people are watching movies now, you don’t go to the theater; really everybody’s streaming data. We’ve done things like Maxine, which is our platform for really improving the way video conferencing works. We’re adding new features and new capabilities that improve audio and improve video: they make the images much sharper and the audio much clearer. We do things for content distribution networks at the edge.

In that last-mile connectivity into your home, everybody’s home has a little bit different connection. Some of them are faster and some are slower, and it turns out you need to figure out what that is and deliver the video to each person at exactly the speed they need, and now you need to do that for 100,000 homes. It turns out those are really hard problems to solve with a CPU trying to manage all of that. So we do that in the network. We have smart networking that can actually do video conferencing, we have smart DPUs that can improve video and audio, and it’s really taking the infrastructure that we have today and making it work better for everybody who’s working at home now.
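The per-home delivery problem described here is, in general terms, a bitrate selection problem. The following is a minimal sketch of the idea and not a description of how NVIDIA's products do it: the bitrate ladder, the 0.8 headroom factor, the home names and the function `pick_bitrate` are all hypothetical values chosen for illustration.

```python
from bisect import bisect_right

# Hypothetical encoding ladder in kbit/s, sorted ascending (assumed values).
LADDER_KBPS = [400, 1200, 2500, 5000, 8000]


def pick_bitrate(measured_kbps: float, headroom: float = 0.8) -> int:
    """Pick the highest ladder rung that fits within a fraction of the
    measured last-mile bandwidth; fall back to the lowest rung otherwise."""
    budget = measured_kbps * headroom
    idx = bisect_right(LADDER_KBPS, budget) - 1
    return LADDER_KBPS[max(idx, 0)]


if __name__ == "__main__":
    # Hypothetical bandwidth measurements for three homes, in kbit/s.
    homes = {"home-a": 900.0, "home-b": 3500.0, "home-c": 12000.0}
    for home, bandwidth in homes.items():
        print(home, pick_bitrate(bandwidth))
```

The selection itself is cheap. The cost the interview points to comes from measuring, pacing and delivering streams for a very large number of homes at once, which is the part being moved into the network.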

AI, 5G, autonomous driving and other technologies are shaping the data center

Bill Detwiler: You’ve mentioned AI several times, we’ve talked about SDN, and we’ve talked about some of the other technological advances that are driving the need for these increased network speeds and better management of data as it flows through the data center. What are the biggest changes that you see in the near term and further out with AI? You also mentioned edge, right? You mentioned IoT and 5G. We’ve often seen this push and pull, or sort of a wax and a wane, in terms of what can be processed in the cloud and what needs to be done at the edge. It seems like even though we get faster connections, there’s still more and more that’s being done on the edge.

AI has a lot to do with that. We’re hoping 5G with its high bandwidth low latency will help enable some of these technologies like AI, autonomous driving, things like telehealth, like you talked about, but it definitely seems that as we get some of these enabling technologies that we discover new problems or we discover new ways like, ‘Oh, we didn’t think about this when we wanted to use AI in this way.’ I’d just love to hear your thoughts in terms of how those technologies are continuing to kind of shape the data center in the next five years or so.

Kevin Deierling: I think it’s a great observation that some of these things are literally constrained by the speed of light: just how long it takes for data to move, even when it’s being transmitted through a fiber at the speed of light. Those things need to be at the edge. People aren’t thinking about it, but you mentioned autonomous driving; those decisions need to be made in the car. You can’t go back to the cloud to decide that you need to apply the brakes because there was somebody walking through the crosswalk in front of you, so you’re going to transport a data center and an AI supercomputer into a car. That’s how autonomous driving is working. There are inferencing engines that are making split-second decisions, and you simply can’t afford the latency. There are other applications that may need to compress the data before it’s sent for further processing in the cloud.
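As a rough illustration of the speed-of-light point, the sketch below estimates the best-case round trip over fiber and how far a car travels in that time. The distances and the 100 km/h vehicle speed are assumed values, and the two-thirds velocity factor for light in glass fiber is a common approximation; none of these figures come from the interview.

```python
# Illustrative only: distances and vehicle speed are assumed values.
C_VACUUM_KM_S = 299_792.458    # speed of light in vacuum, km/s
FIBER_VELOCITY_FACTOR = 2 / 3  # light in glass fiber travels at roughly 2/3 c (approximation)


def fiber_round_trip_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay over fiber, in milliseconds.
    Ignores switching, queuing and processing time, so real latency is higher."""
    one_way_s = distance_km / (C_VACUUM_KM_S * FIBER_VELOCITY_FACTOR)
    return 2 * one_way_s * 1000


def metres_travelled(speed_kmh: float, delay_ms: float) -> float:
    """Distance a vehicle covers while waiting delay_ms for an answer."""
    return speed_kmh / 3.6 * delay_ms / 1000


if __name__ == "__main__":
    for km in (10, 100, 1000):
        rtt = fiber_round_trip_ms(km)
        print(f"{km:>5} km away: {rtt:.2f} ms round trip, "
              f"{metres_travelled(100, rtt):.3f} m travelled at 100 km/h")
```

Propagation delay is only the floor. Once radio access, queuing and inference time in a remote data center are added, the total grows well beyond it, which is the case for making braking decisions in the car.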

Again, AI can do those kinds of things, but I think one of the really important things that you raised was that we can’t anticipate all of the things that may come out of that, just as nobody anticipated that we would have new businesses for on-demand driving, where you could call up an Uber or a Lyft. The taxi business was really impacted dramatically. Nobody really anticipated that when we came out with smartphones more than a decade ago, and by the same token, 5G is going to create new opportunities because of its higher bandwidth and lower latency and the ability to do network slicing, in ways that, frankly, we can’t imagine. We can’t think of all the different things, but there are going to be applications where we’ll scratch our heads and say, ‘Why didn’t I think of that?’ From an NVIDIA perspective, I think what’s important is that we have a software platform, more than just the hardware, underneath everything we’re doing, including software-defined radios.

We have what’s called Aerial, which are 5G radios that are programmed on top of a general-purpose accelerated computing platform that we call EGX. With that, we don’t know what the new cool AI-enabled accelerated computing applications are going to be, but we have a platform that does 5G and whatever else shows up. Whether it’s telemedicine or health services or autonomous driving, whether it’s Isaac, which is our robotics platform, we’re excited that we have this general-purpose accelerated computing platform with EGX. That’s going to address all of these markets, and one of those is really going to become an explosive app that really drives 5G. I just don’t know what it is yet.
